ALCHIMIA Alpha Minimum Viable Prototype (MVP)

Welcome to the dawn of the ALCHIMIA platform! After the first months of hard work, we are thrilled to share the release of the alpha version of the project’s platform, which is focused on bringing innovative Federated Learning (FL) capabilities. This alpha release marks the first tangible manifestation of our collective efforts, offering a glimpse into the powerful features that will be available at the end of the project.

Federated Learning (FL) is a decentralized Machine Learning (ML) approach in which participants collaboratively train a shared global model without sharing their raw data, which preserves privacy and reduces data transfer overhead.

The ALCHIMIA system allows users to create Federated Learning training jobs using Atos FL, a Federated Learning framework based on the pipes-and-filters architectural pattern. The design of Atos FL is driven primarily by two key aspects of ALCHIMIA’s architecture: communication between the central server and clients, and support for various privacy-preserving techniques.

Design

Atos FL employs the “pipe and filter” software design pattern, breaking down complex tasks into smaller, independent steps (filters) connected by pipes. Each filter performs a specific operation, with its output becoming the input for the next filter. The main benefits of this architecture are loose coupling, filter independence and reusability, and parallel processing.

Figure 1: Pipe and filter pattern scheme

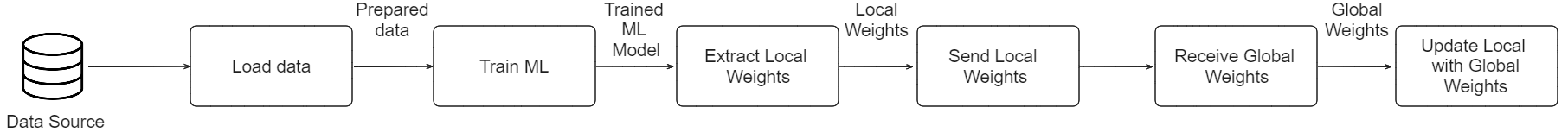
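
To make the pattern concrete, here is a minimal Python sketch of a pipe-and-filter chain. It is not Atos FL code: the filter names (`normalize`, `clip`, `round_2`) and the `build_pipeline` helper are hypothetical and chosen only for illustration.

```python
from functools import reduce
from typing import Callable, List

# A filter is any function that takes a list of values and returns a new list.
Filter = Callable[[List[float]], List[float]]


def normalize(values: List[float]) -> List[float]:
    """Filter: scale values so the largest absolute value becomes 1."""
    peak = max((abs(v) for v in values), default=0.0)
    return [v / peak for v in values] if peak else list(values)


def clip(values: List[float]) -> List[float]:
    """Filter: clamp every value to the range [-0.5, 0.5]."""
    return [max(-0.5, min(0.5, v)) for v in values]


def round_2(values: List[float]) -> List[float]:
    """Filter: round every value to two decimals."""
    return [round(v, 2) for v in values]


def build_pipeline(*filters: Filter) -> Filter:
    """Pipe: feed the output of each filter into the next one, in order."""
    def run(data: List[float]) -> List[float]:
        return reduce(lambda acc, f: f(acc), filters, data)
    return run


pipeline = build_pipeline(normalize, clip, round_2)
result = pipeline([2.0, -4.0, 1.0])
print(result)
```

Because each filter only depends on its input, filters can be reordered, reused in other pipelines, or replaced without touching their neighbours, which is the loose coupling the pattern is valued for.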

FL is an approach that enables ML training across multiple participants while keeping data decentralized and secure. In a centralized FL scenario, each client should perform at least the steps illustrated in Figure 2.

Figure 2: ALCHIMIA FL Client pipeline

From the central server’s perspective, the minimal steps needed are shown in Figure 3.

Figure 3: ALCHIMIA FL Server pipeline
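
The exact steps in Figures 2 and 3 are not spelled out in the text, so the following is only a generic sketch of one centralized FL round, not the Atos FL pipeline. It assumes federated averaging (a sample-weighted mean of client weights) as the server-side aggregation and a single gradient step as the client-side update; `local_update` and `federated_average` are hypothetical names.

```python
from typing import List, Tuple

Weights = List[float]


def local_update(global_weights: Weights, gradient: Weights, lr: float) -> Weights:
    """Client side (assumed): one gradient-descent step computed on local data.

    Only the resulting weights leave the client, never the data itself.
    """
    return [w - lr * g for w, g in zip(global_weights, gradient)]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Server side (assumed): average client weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples reported by any client")
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# One round with two clients; gradients and sample counts are made-up numbers.
global_weights = [0.0, 0.0]
client_a = local_update(global_weights, [-2.0, -4.0], lr=0.5)  # trained on 1 sample
client_b = local_update(global_weights, [-6.0, -8.0], lr=0.5)  # trained on 3 samples
new_global = federated_average([(client_a, 1), (client_b, 3)])
print(new_global)
```

Weighting by sample count lets clients with more data contribute proportionally more to the global model; other aggregation rules could be substituted without changing the overall round structure.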

Components

At the core of the Atos FL framework is the “Pod”, a component that encapsulates specific functionalities, such as federated server weight aggregation.

The Pod employs the listener design pattern, defining input and output wires for interaction with the external environment. Custom functionalities can be achieved by extending Pods, introducing new wires, and assigning default handlers.

Figure 4: Pod definition in Atos FL
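
The listener-based Pod described above could look roughly like the following Python sketch. It is an illustration under assumptions, not the Atos FL API: the `Pod` and `AggregatorPod` classes, their method names, and the wire names `client_weights` and `global_weights` are all hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Any], None]


class Pod:
    """Hypothetical base Pod: named input wires with handlers, output wires with listeners."""

    def __init__(self) -> None:
        self._input_handlers: Dict[str, Handler] = {}
        self._output_listeners: Dict[str, List[Handler]] = defaultdict(list)

    def register_input(self, wire: str, handler: Handler) -> None:
        """Assign the default handler for an input wire."""
        self._input_handlers[wire] = handler

    def connect(self, wire: str, listener: Handler) -> None:
        """Attach an external listener to an output wire."""
        self._output_listeners[wire].append(listener)

    def receive(self, wire: str, payload: Any) -> None:
        """Deliver a payload from the outside world to an input wire."""
        self._input_handlers[wire](payload)

    def emit(self, wire: str, payload: Any) -> None:
        """Notify every listener attached to an output wire."""
        for listener in self._output_listeners[wire]:
            listener(payload)


class AggregatorPod(Pod):
    """Extended Pod (assumed): averages client weights once all clients have reported."""

    def __init__(self, expected_clients: int) -> None:
        super().__init__()
        self.expected_clients = expected_clients
        self._buffer: List[List[float]] = []
        self.register_input("client_weights", self._on_weights)

    def _on_weights(self, weights: List[float]) -> None:
        self._buffer.append(weights)
        if len(self._buffer) == self.expected_clients:
            dim = len(self._buffer[0])
            mean = [sum(w[i] for w in self._buffer) / self.expected_clients
                    for i in range(dim)]
            self._buffer.clear()
            self.emit("global_weights", mean)


received: List[List[float]] = []
pod = AggregatorPod(expected_clients=2)
pod.connect("global_weights", received.append)
pod.receive("client_weights", [1.0, 2.0])
pod.receive("client_weights", [3.0, 4.0])
print(received)
```

Because the Pod only knows about wires, the same aggregation logic can be connected to a network transport, another Pod, or a test harness without modification.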

This Pod-centric approach facilitates the implementation of communication protocols, supporting decentralized or centralized Federated Learning.

Communication

Kafka was selected as the underlying communication layer due to its robustness, scalability, and real-time data streaming capabilities. Because Kafka persists messages in durable, append-only topic logs for a configurable retention period, clients can retrieve updates they missed once they come back online.

The integration of Kafka within Atos FL involves the following components:

- Producers: Publish messages to specific Kafka topics representing different communication channels.

- Consumers: Subscribe to relevant topics and receive messages in real-time.

- Topics: Serve as communication channels, organizing and categorizing messages for different aspects of the FL process (e.g., model updates).

Figure 5: Kafka-based communication workflow
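
The roles listed above, and the ability of a client to catch up after being offline, can be illustrated with a small in-memory stand-in. This sketch deliberately does not use a Kafka client library or a real broker: `InMemoryBroker`, its methods, and the topic name `model-updates` are hypothetical and only mimic the idea of an append-only topic log with per-consumer offsets.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class InMemoryBroker:
    """Toy stand-in for a broker: one append-only log per topic, one offset per consumer."""

    def __init__(self) -> None:
        self._logs: Dict[str, List[str]] = defaultdict(list)
        self._offsets: Dict[Tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, message: str) -> int:
        """Producer role: append a message to a topic and return its offset."""
        self._logs[topic].append(message)
        return len(self._logs[topic]) - 1

    def poll(self, consumer_id: str, topic: str) -> List[str]:
        """Consumer role: return every message not yet seen by this consumer."""
        log = self._logs[topic]
        start = self._offsets[(consumer_id, topic)]
        self._offsets[(consumer_id, topic)] = len(log)
        return log[start:]


broker = InMemoryBroker()

broker.publish("model-updates", "round-1")
first = broker.poll("client-a", "model-updates")

# client-a goes offline while the server keeps publishing.
broker.publish("model-updates", "round-2")
broker.publish("model-updates", "round-3")

# Back online: the stored log lets the client catch up on what it missed.
missed = broker.poll("client-a", "model-updates")
nothing_new = broker.poll("client-a", "model-updates")
print(first, missed, nothing_new)
```

In a real Kafka deployment the log is distributed and how long messages remain available depends on the topic's retention settings, but the catch-up behaviour follows the same offset-based idea.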